Overview

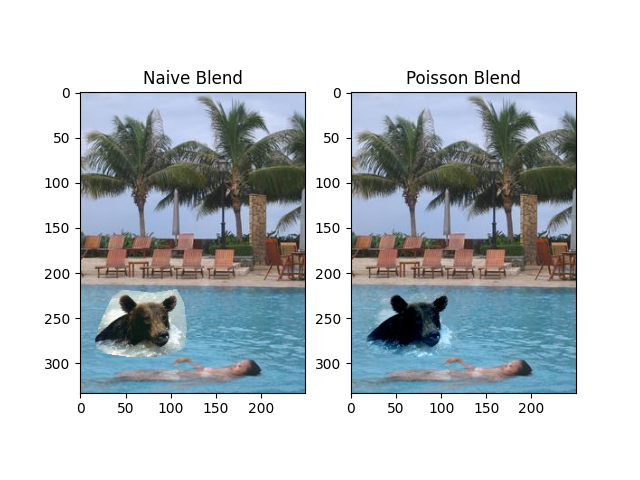

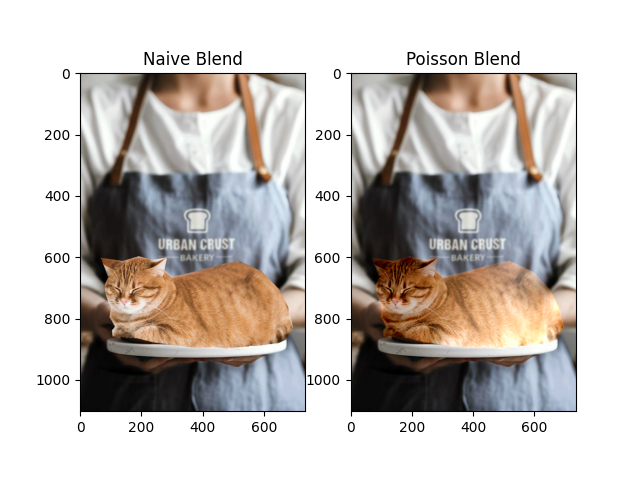

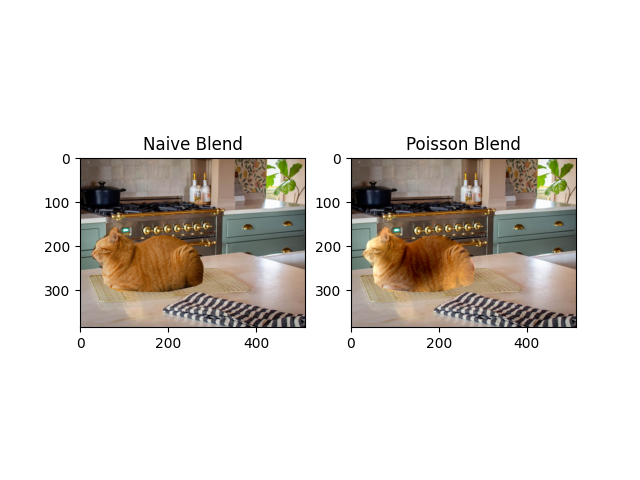

In this project, I explore gradient-domain processing, a technique that manipulates the gradients of an image rather than its raw pixel values. It is widely used for tasks such as seamless blending, tone mapping, and non-photorealistic rendering. The core of this project is Poisson blending, which inserts an object from a source image into a target image by preserving the object's gradients while matching the target's pixel values along the boundary of the pasted region. Unlike simple copy-and-paste, which leaves harsh seams, this approach produces smooth transitions and a more realistic result. Because the method works with gradient differences rather than absolute intensities, I found that objects blend naturally even when their intensity or lighting differs from the target's. Beyond Poisson blending, I also examine failure cases and some post-processing solutions.
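To make the Poisson blending idea concrete, here is a minimal sketch of the discrete version for a single grayscale channel. It is not necessarily the implementation used in this project. Let $S$ be the set of masked pixels, $N_i$ the 4-neighbors of pixel $i$, $s$ the source, and $t$ the target. The blended values $v$ inside the mask solve one linear equation per masked pixel: the source gradients act as the guidance field, and the target supplies fixed values at the region boundary:

$$
4\,v_i - \sum_{j \in N_i \cap S} v_j = \sum_{j \in N_i} (s_i - s_j) + \sum_{j \in N_i \setminus S} t_j, \qquad i \in S.
$$

The function name `poisson_blend` is hypothetical. The sketch assumes float images of equal shape and a boolean mask that does not touch the image border. For clarity it builds the system as a dense matrix, which only works for small masked regions.

```python
import numpy as np


def poisson_blend(source, target, mask):
    """Hypothetical helper: blend the masked region of `source` into `target`.

    Single-channel sketch: solves the discrete Poisson equation with the
    source gradients as guidance and the target as the Dirichlet boundary.
    Uses a dense solve, so it is only practical for small masks.
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    # Every masked pixel needs all four neighbors inside the image.
    if mask[0, :].any() or mask[-1, :].any() or mask[:, 0].any() or mask[:, -1].any():
        raise ValueError("mask must not touch the image border")

    ys, xs = np.nonzero(mask)
    n = len(ys)
    # Map each masked pixel to the index of its unknown in the linear system.
    index = -np.ones(mask.shape, dtype=int)
    index[ys, xs] = np.arange(n)

    A = np.zeros((n, n))
    b = np.zeros(n)
    for k, (y, x) in enumerate(zip(ys, xs)):
        for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            ny, nx = y + dy, x + dx
            A[k, k] += 1                          # builds the 4 on the diagonal
            b[k] += source[y, x] - source[ny, nx]  # guidance: source gradient
            if mask[ny, nx]:
                A[k, index[ny, nx]] -= 1          # neighbor is another unknown
            else:
                b[k] += target[ny, nx]            # boundary: fixed target value

    result = target.copy()
    result[ys, xs] = np.linalg.solve(A, b)
    return result
```

For RGB images the same solve would be run once per channel. For realistic mask sizes, one would assemble `A` as a sparse matrix and swap in a sparse solver such as `scipy.sparse.linalg.spsolve`. That swap changes only performance, not the equations being solved.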